Cybersecurity meets Generative AI: Automating Your Compliance Operations

How AI can help you bootstrap cybersecurity compliance operations with less cost, time and overhead.

Automating business operations is the next bold frontier in advanced Large Language Model (LLM) applications. One such area is cybersecurity compliance, which often requires a specialized skill set to prepare an organization for an audit or assessment against industry-standard frameworks or regulatory requirements. This process tends to be mostly manual, time-consuming and costly, and it usually repeats every year.

With the recent advances in generative AI, an opportunity now exists to augment LLMs with domain-specific knowledge and leverage them to help automate compliance audits.

This post builds on a previous post that laid out the vision for a compliance co-pilot and described how to design a customized AI model for compliance operations. Here, the focus is on using a co-pilot to prepare an organization for compliance audits.

Background

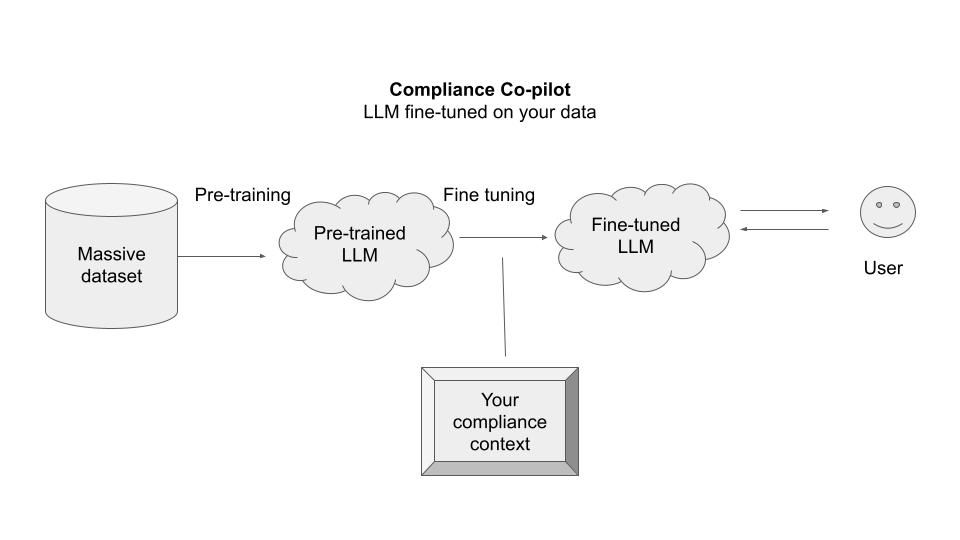

What is a compliance co-pilot? The compliance co-pilot is built upon a pre-trained LLM that can be fine-tuned using the current compliance state of the organization. This state is usually captured in existing data sources within the organization (such as policies, product specs and other system documents), which can be used to augment the inference and generative capabilities of the LLM.

How does a compliance co-pilot help? It helps automate the key steps in your compliance journey so that you become audit-ready in less time and at lower cost than with traditional approaches. An "audit-ready" control is one that is mapped to a specific test, together with the evidence in the target system needed to pass that test.
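To make the definition concrete, here is a minimal Python sketch of an audit-ready check. It assumes a hypothetical data model (`Control`, `ControlTest`, `Evidence`) and treats a control as audit-ready only when it is mapped to at least one test and every mapped test has supporting evidence. The control and test IDs are illustrative and not taken from any real framework.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    """Evidence collected from the target system (hypothetical model)."""
    source: str        # e.g. an API call or a document location
    description: str


@dataclass
class ControlTest:
    """A test a control must pass, with the evidence gathered for it."""
    test_id: str
    description: str
    evidence: list[Evidence] = field(default_factory=list)


@dataclass
class Control:
    control_id: str
    framework: str
    tests: list[ControlTest] = field(default_factory=list)

    def is_audit_ready(self) -> bool:
        # Audit-ready: mapped to at least one test, and every mapped
        # test has at least one piece of supporting evidence.
        return bool(self.tests) and all(t.evidence for t in self.tests)


ctrl = Control("CTRL-001", "ExampleFramework",
               [ControlTest("T-1", "Data at rest is encrypted")])
print(ctrl.is_audit_ready())  # False: the test has no evidence yet
ctrl.tests[0].evidence.append(
    Evidence("ec2:DescribeVolumes", "All attached EBS volumes are encrypted"))
print(ctrl.is_audit_ready())  # True
```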

Today, the path to audit-ready controls goes through professional services and a significant amount of manual process. A true compliance co-pilot will reduce that dependency.

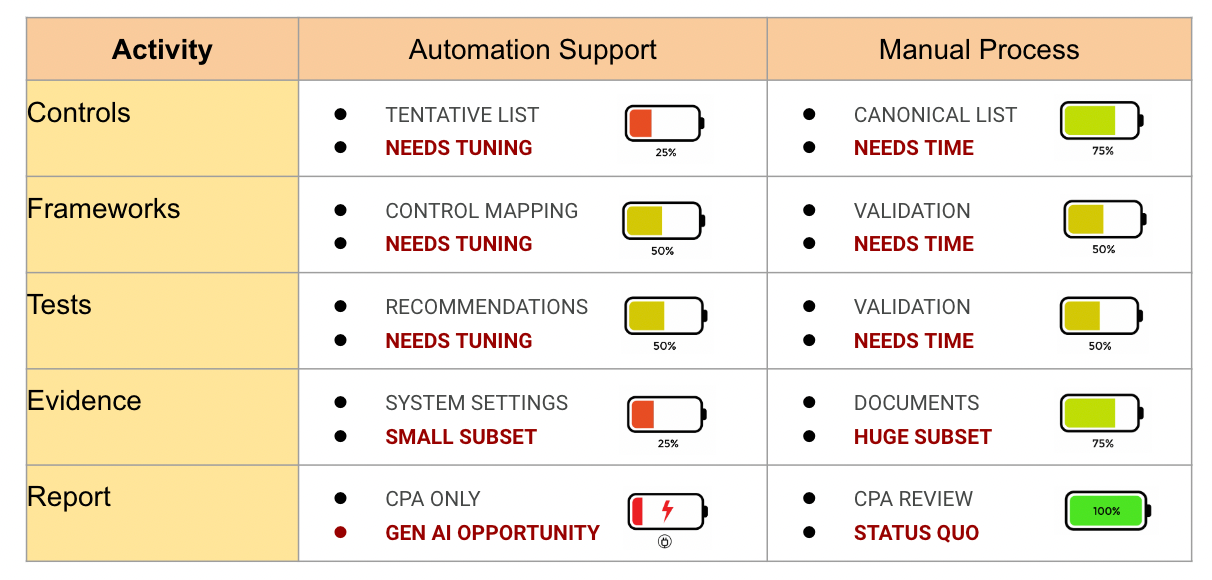

What is the state of the art in audit automation? While some automation support is currently available for compliance audits, a considerable amount of manual process remains, requiring significant time and effort.

- Controls: The tools can identify the controls for a given framework, and even across frameworks. However, this is a tentative list that must be converted into a canonical list after manual review. Similarly, the control mapping for the applicable framework(s) must also be validated through manual review.

- Tests: The tools can recommend the tests needed to satisfy the applicable controls. However, by default these tests are based on guidance that accounts for the framework(s) but not for the profile and state of the organization. The tests therefore require manual validation.

- Evidence: The tools can help gather evidence for some tests. However, that support is limited to a small subset of controls where the required evidence can be gathered directly from a system through an API call, e.g., encryption settings for AWS EC2 instances. The remaining, larger subset usually takes the form of documents scattered across multiple teams and repositories.
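As an illustration of the API-collectible case, the sketch below turns an EC2 volume listing into an evidence record. Encryption at rest for EC2 instances is configured on their attached EBS volumes, so the sketch reads the `Encrypted` flag from a dictionary shaped like the response of boto3's `describe_volumes()`. The sample response, volume IDs and record format are assumptions for illustration; a real collector would call the AWS API (and paginate) instead of using hard-coded data.

```python
# A sample shaped like the dict returned by boto3's
# ec2_client.describe_volumes(); in practice you would call
# boto3.client("ec2").describe_volumes() (with pagination) instead.
SAMPLE_RESPONSE = {
    "Volumes": [
        {"VolumeId": "vol-0aaa", "Encrypted": True,
         "Attachments": [{"InstanceId": "i-0111"}]},
        {"VolumeId": "vol-0bbb", "Encrypted": False,
         "Attachments": [{"InstanceId": "i-0222"}]},
    ]
}


def collect_encryption_evidence(response: dict) -> dict:
    """Turn a describe_volumes-style response into an evidence record."""
    volumes = response.get("Volumes", [])
    # Any volume without Encrypted=True counts as a finding.
    unencrypted = [v["VolumeId"] for v in volumes if not v.get("Encrypted", False)]
    return {
        "check": "EBS volumes attached to EC2 instances are encrypted at rest",
        "volumes_checked": len(volumes),
        "unencrypted_volumes": unencrypted,
        "passed": len(unencrypted) == 0,
    }


evidence = collect_encryption_evidence(SAMPLE_RESPONSE)
print(evidence["passed"], evidence["unencrypted_volumes"])  # False ['vol-0bbb']
```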

Why is it hard to automate the manual processes? One of the most important components of an audit is domain expertise. The manual processes in an audit are the domain of compliance experts providing professional services, such as CPA review and validation, and are hard to automate in their entirety. A successful co-pilot will have to mimic how a domain expert operates, and learn to think like one, to support them with smart (i.e., AI-assisted) review and validation.

How can generative AI capabilities be applied? Current tools do not leverage generative AI capabilities that could help remediate gaps in evidence, rather than only identifying them. For example, on-demand generative templates can be used to create missing documentary evidence, or the audit reports for final review by the CPA, which could reduce the time and effort required to complete the audit. Leveraging these capabilities presents a major opportunity for end-to-end audit automation.
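A generative template can be as simple as a parameterized prompt. The sketch below uses Python's `string.Template` to build a drafting prompt for a missing policy document; the template wording, field names and organization details are hypothetical, and the LLM call itself is left out. Any draft produced this way would still need review before it is accepted as evidence.

```python
from string import Template

# Hypothetical prompt template for drafting a missing policy document.
# $-placeholders are filled from the evidence gap and the organization profile.
POLICY_DRAFT_TEMPLATE = Template(
    "You are a compliance analyst preparing evidence for a $framework audit.\n"
    "Draft a $policy_name for $org_name, a $org_profile.\n"
    "The policy must address control $control_id: $control_text\n"
    "Mark every statement the organization must confirm with [TO CONFIRM]."
)


def build_gap_prompt(gap: dict, org: dict) -> str:
    """Fill the template for one evidence gap.

    Template.substitute raises KeyError if any placeholder is left unfilled,
    which surfaces incomplete gap or profile data early.
    """
    return POLICY_DRAFT_TEMPLATE.substitute({**gap, **org})


gap = {
    "framework": "SOC 2",
    "policy_name": "data retention policy",
    "control_id": "CTRL-042",
    "control_text": "Data is retained only as long as it is required.",
}
org = {"org_name": "Example Corp", "org_profile": "50-person SaaS company"}

prompt = build_gap_prompt(gap, org)
print(prompt.splitlines()[1])
# Draft a data retention policy for Example Corp, a 50-person SaaS company.
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a missing field fails loudly instead of leaving a `$placeholder` in the prompt sent to the model.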

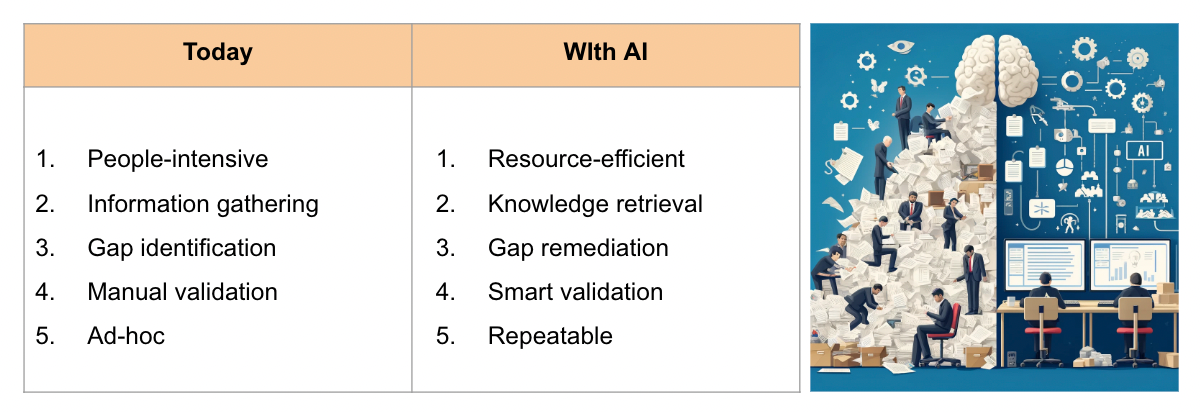

To summarize, here are the potential high-level benefits of using a compliance co-pilot for audits compared to how audits are done today:

Approach

How does an audit with a compliance co-pilot work? Imagine the following scenario.

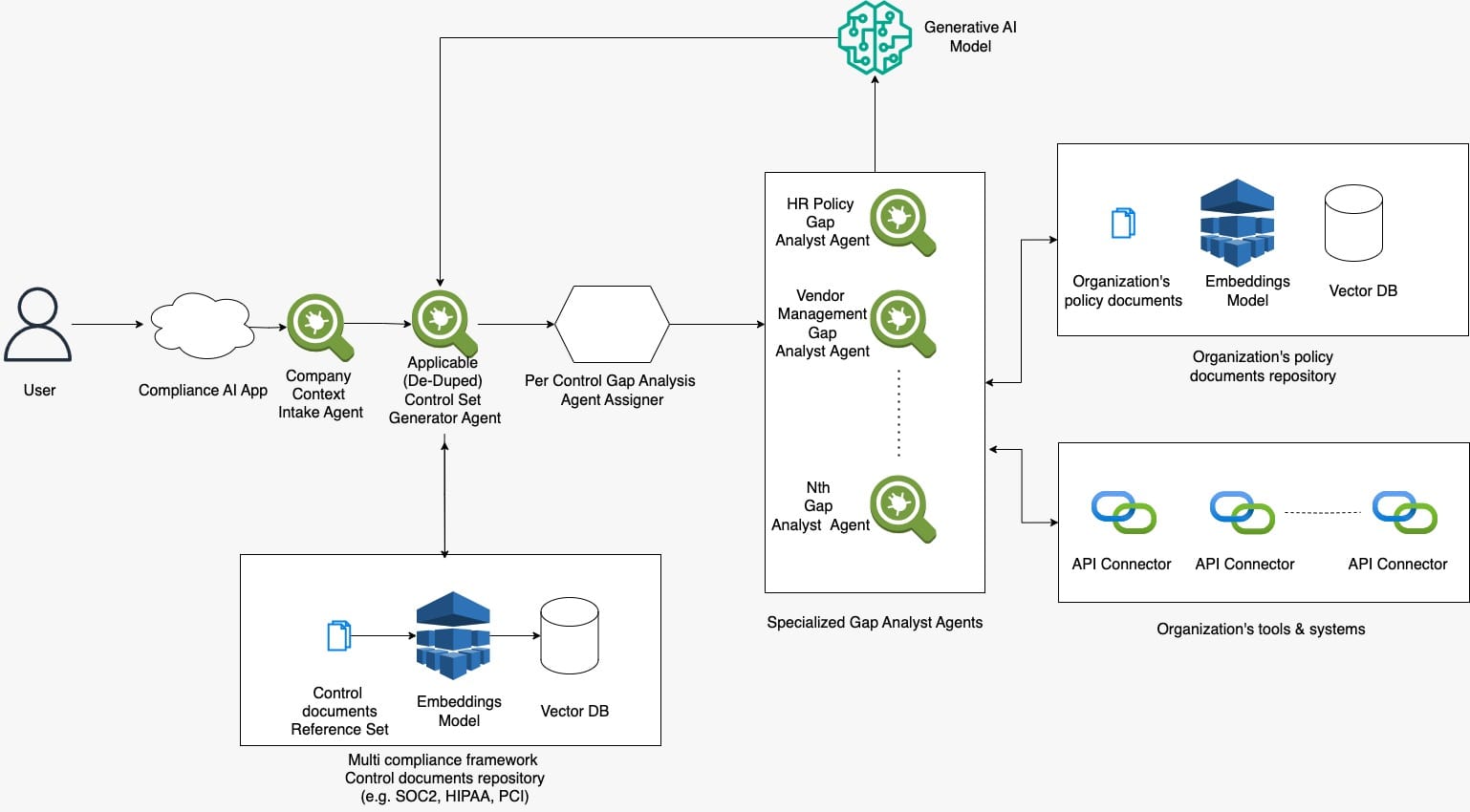

The compliance co-pilot is expected to take as input the profile and state of the organization, and to produce as output the level of compliance with applicable obligations, expressed as the number of controls that are fully satisfied, partially satisfied or not satisfied.
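The output described above can be sketched as a simple aggregation. Assuming each control's mapped tests have already been run and reduced to pass/fail results (a hypothetical input format), a control is fully satisfied when all its tests pass, partially satisfied when some pass, and not satisfied otherwise. Counting a control with no mapped tests as not satisfied is a design choice of this sketch, and the control IDs are illustrative.

```python
from collections import Counter


def control_status(results: list[bool]) -> str:
    """Classify one control from the pass/fail results of its mapped tests."""
    if results and all(results):
        return "fully satisfied"
    if any(results):
        return "partially satisfied"
    return "not satisfied"  # no passing tests, or no tests mapped at all


def compliance_summary(test_results: dict[str, list[bool]]) -> Counter:
    """Count controls by status; test_results maps control ID -> test outcomes."""
    return Counter(control_status(r) for r in test_results.values())


results = {
    "CTRL-001": [True, True],
    "CTRL-002": [True, False],
    "CTRL-003": [False],
    "CTRL-004": [],  # no tests mapped yet
}
summary = compliance_summary(results)
for status in ("fully satisfied", "partially satisfied", "not satisfied"):
    print(f"{status}: {summary[status]}")
```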

To do so, it must overcome the challenges described above that make the manual processes hard to automate. This means it has to meet the following requirements:

- Produce a canonical control list

- Produce and validate control mapping

- Produce and validate tests for the controls

- Retrieve documentary evidence for the tests

- Remediate gaps using generative templates
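The first two requirements can be illustrated with a crosswalk that maps each framework control to a canonical control. In this sketch the crosswalk, framework names and control IDs are all hypothetical; in practice the co-pilot would propose such a mapping and a reviewer would validate it. Controls with no mapping are returned separately so they can be routed to manual review rather than silently dropped.

```python
from collections import defaultdict

FrameworkControl = tuple[str, str]  # (framework, framework control ID)

# Hypothetical crosswalk: framework control -> canonical control ID.
CROSSWALK: dict[FrameworkControl, str] = {
    ("FrameworkA", "A-1"): "CC-ENCRYPT-AT-REST",
    ("FrameworkB", "B-7"): "CC-ENCRYPT-AT-REST",
    ("FrameworkA", "A-2"): "CC-ACCESS-REVIEW",
}


def canonical_controls(
    required: list[FrameworkControl],
    crosswalk: dict[FrameworkControl, str],
) -> tuple[dict[str, list[FrameworkControl]], list[FrameworkControl]]:
    """Collapse framework controls into a canonical list.

    Returns (canonical ID -> sorted framework controls it satisfies,
             framework controls with no mapping, for manual review).
    """
    mapping: dict[str, list[FrameworkControl]] = defaultdict(list)
    unmapped: list[FrameworkControl] = []
    for key in required:
        canonical = crosswalk.get(key)
        if canonical is None:
            unmapped.append(key)
        else:
            mapping[canonical].append(key)
    return {c: sorted(keys) for c, keys in mapping.items()}, unmapped


required = [("FrameworkA", "A-1"), ("FrameworkA", "A-2"),
            ("FrameworkB", "B-7"), ("FrameworkB", "B-9")]
canon, needs_review = canonical_controls(required, CROSSWALK)
print(len(canon), needs_review)  # 2 [('FrameworkB', 'B-9')]
```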

Finally, the overall requirement is to provide the same level of accuracy as a compliance professional, but in less time and at lower cost. These requirements guide the solution architecture of the compliance co-pilot, outlined below.

Architecture

As previously mentioned, the compliance co-pilot will be built on top of pre-trained LLMs that are customized to address the specific use cases and meet the specific requirements described above.

The proposed reference architecture for such a compliance co-pilot combines AI agents and RAG (Retrieval-Augmented Generation) with fine-tuning and prompt engineering. As part of the fine-tuning process, we also apply RLHF (Reinforcement Learning from Human Feedback) in an attempt to make the outputs more accurate, and integrate ethics principles into the model to address governance concerns.

We built a tool, called EazyGRC, that implements this reference architecture. It uses OpenAI GPT-4 as the base model, LangChain for RAG and AI agents, FAISS as the vector storage and search library, and zero-shot learning for the initial fine-tuning.
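To show the retrieval step of RAG in isolation, here is a dependency-free sketch. It is not how EazyGRC is implemented: bag-of-words vectors stand in for an embedding model, and a linear scan with cosine similarity stands in for a FAISS index. The document names and contents are hypothetical. The retrieved passages are prepended to the question so the base model answers from the organization's own documents.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of the k documents most similar to the query (linear scan)."""
    q = vectorize(query)
    ranked = sorted(docs, key=lambda name: cosine(q, vectorize(docs[name])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, docs: dict[str, str], k: int = 2) -> str:
    """Augment the question with retrieved organizational context."""
    context = "\n\n".join(f"[{name}]\n{docs[name]}" for name in retrieve(question, docs, k))
    return (
        "Answer using only the context below and cite document names.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


DOCS = {  # hypothetical organizational documents
    "encryption-policy.md": "All customer data at rest is encrypted with AES-256 using KMS keys.",
    "access-review.md": "Access to production systems is reviewed quarterly by the security team.",
    "onboarding.md": "New employees complete security awareness training in their first week.",
}

print(retrieve("Is customer data encrypted at rest?", DOCS, k=1))  # ['encryption-policy.md']
```

With a real embedding model, the `vectorize` and `cosine` pair would be replaced by dense vectors held in a FAISS index, which performs the same nearest-neighbor search far more efficiently over large document sets.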

Conclusion

Agentic AI systems can help you bootstrap cybersecurity compliance operations with less cost, time and overhead. This post outlined the motivation, approach, and a reference architecture for designing and implementing a co-pilot to help prepare an organization for compliance audits.

Connect with us

If you would like to reach out for guidance or provide other input, please do not hesitate to contact us. To sign up for access to EazyGRC, please join the waitlist.