Compliance Co-Pilot: A Fine-Tuned AI Model for Security Audits and Assessments

Customized AI models can significantly reduce the burden for organizations in managing their compliance operations.

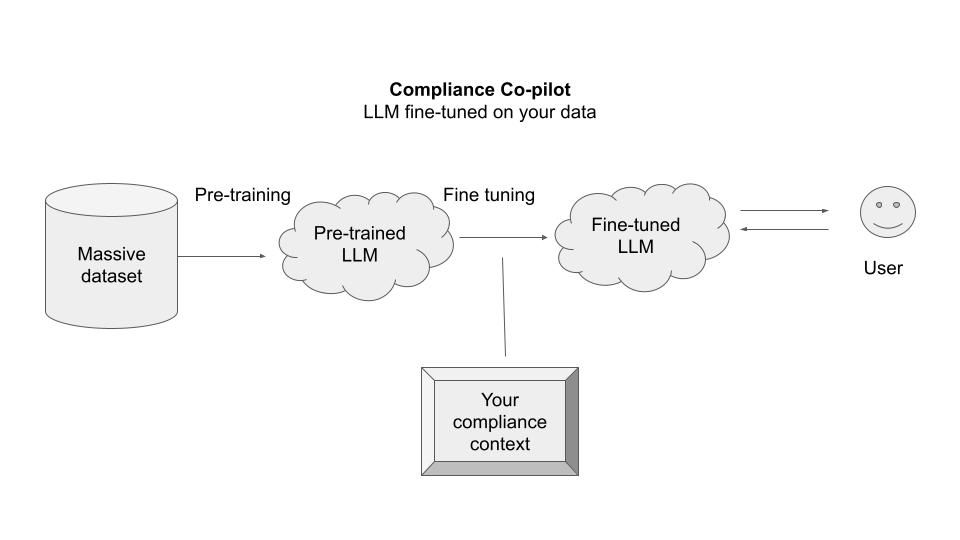

This post builds on a previous post that laid out the vision for AI-assisted data privacy operations. It describes how to design a customized AI model for compliance operations that is privately hosted and fine-tuned on an organization's own data and policies.

With this context, the model can help automate cybersecurity and data privacy audits and risk assessments. It can also generate documentation for the organization, such as custom training material and compliance reports.

Below are the design considerations for such a model, which we will call the compliance co-pilot.

Scope

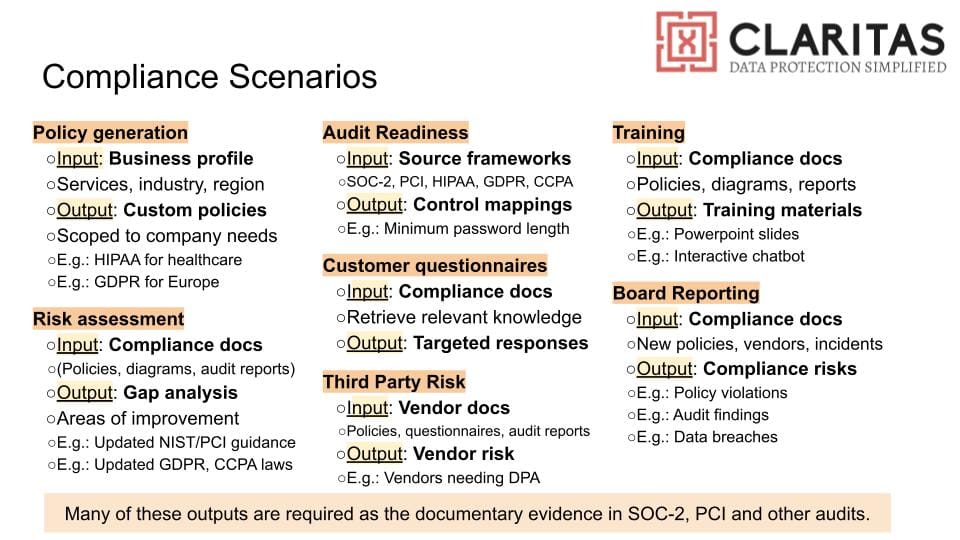

The first consideration when designing any AI model is the scope of actions that the model is expected to perform. In the case of the compliance co-pilot, the model is expected to perform both extraction and generation tasks.

- The extraction tasks analyze compliance documents, such as policies and audit reports, and extract useful information from them, such as the compliance obligations and technical controls that apply to the organization.

- The generation tasks answer questions about compliance and create new compliance documents, such as custom training material and compliance reports for the organization.
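
To make the shape of an extraction task concrete, here is a minimal sketch. It is a rule-based stand-in, not a model: the `Obligation` type, the trigger-word list, and the sample policy text are all hypothetical and only illustrate what goes in (a document) and what comes out (structured obligations).

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Obligation:
    source: str  # name of the document the sentence came from
    text: str    # the sentence that states the obligation


# Hypothetical trigger words; a real model would not depend on a fixed list.
_TRIGGERS = re.compile(r"\b(must|shall|is required to|are required to)\b", re.IGNORECASE)


def extract_obligations(source: str, document: str) -> list[Obligation]:
    """Return sentences that look like obligations (toy stand-in for the model)."""
    sentences = re.split(r"(?<=[.!?])\s+", document.strip())
    return [Obligation(source, s) for s in sentences if _TRIGGERS.search(s)]


# Made-up policy text for illustration.
policy = (
    "Access to production systems must be reviewed quarterly. "
    "The office is closed on public holidays. "
    "Vendors shall notify us of a breach within 72 hours."
)
obligations = extract_obligations("access-policy", policy)
for o in obligations:
    print(f"[{o.source}] {o.text}")
```

A generation task would consume a list like `obligations` as context when answering questions or drafting documents.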

Base Model

The base model is the existing model that serves as the starting point for building a custom model for the organization. Extraction tasks call for models that can produce embeddings, while generation tasks call for models with text inference and generation capabilities.

Models that support embeddings include "Amazon Titan Embeddings", while models that support text-based inference and text generation include "Amazon Titan Text" and "Anthropic Claude". Other models, such as "Stable Diffusion", specialize in image generation, which the text-based tasks in scope here do not require.
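
As a rough illustration of how an embedding model is called, the sketch below requests a vector from a Titan Embeddings model through the Amazon Bedrock runtime. The helper name `titan_embed` is hypothetical, and the model ID and the request and response fields (`inputText`, `embedding`) are assumptions based on the Titan text embeddings format; check them against the current Bedrock documentation before relying on them.

```python
import json


def titan_embed(client, text: str, model_id: str = "amazon.titan-embed-text-v1") -> list[float]:
    """Return the embedding vector for `text` from a Titan Embeddings model.

    `client` is expected to be a boto3 "bedrock-runtime" client, or any object
    with a compatible `invoke_model` method (useful for offline testing).
    """
    response = client.invoke_model(
        modelId=model_id,  # assumed model ID; verify for your account and region
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"inputText": text}),
    )
    # The response body is a stream; read it and parse the JSON payload.
    payload = json.loads(response["body"].read())
    return payload["embedding"]
```

With AWS credentials and model access in place, the client would typically be created with `boto3.client("bedrock-runtime")` and passed in as the first argument.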

Train vs. Tune

Once we have decided on the base model, the next question is whether to train a model from scratch or fine-tune the base model. The answer depends on various factors.

- Training a model from scratch requires a massive dataset as well as extensive compute resources. It is generally considered only when no existing base model can be adapted well enough to the task.

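- Fine-tuning a pre-trained base model is the preferred approach when it is possible. It can take the form of instruction fine-tuning, which updates the model's weights using examples of prompts and desired responses, or parameter-efficient fine-tuning (PEFT), which keeps most of the base model's weights frozen and trains only a small number of additional or selected parameters. Both are much cheaper and less resource intensive than training a model from scratch. Prompt engineering is a related but distinct technique: it steers the model through its input and does not change the weights at all.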
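
To see why parameter-efficient fine-tuning is cheaper, consider low-rank adaptation (LoRA), one common PEFT method, used here only as an illustration since the post does not name a specific method. LoRA freezes a weight matrix `W` and trains two small matrices `B` and `A` whose product is added to it. The layer size and rank below are hypothetical.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in weight matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank update W + B @ A,
    where B is d_out x rank and A is rank x d_in (W itself stays frozen)."""
    return rank * (d_out + d_in)


# Hypothetical layer size and rank, chosen only for illustration.
d_out, d_in, rank = 4096, 4096, 8
full = full_finetune_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)
print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}): {lora:,} trainable parameters")
print(f"ratio: {lora / full:.2%}")
```

For this single layer, the low-rank update trains 65,536 parameters instead of 16,777,216, which is about 0.39% of the full matrix.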

In the case of the compliance co-pilot, a pre-trained model can be customized using the current compliance state of the organization. This state is usually available as existing data sources in the organization (such as policies, product specs, and other system documents), which can be used to fine-tune the model or supplied at inference time to augment its answers. For example, Amazon Bedrock supports a Retrieval-Augmented Generation (RAG) workflow through knowledge bases and agents that work with organization data to build contextual applications. RAG retrieves the relevant documents at query time and adds them to the prompt, so it complements fine-tuning rather than changing the model's weights.
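
The retrieval step of a RAG workflow can be sketched in a few lines. This is a minimal illustration, not how Amazon Bedrock implements knowledge bases: the `embed` function is a toy bag-of-words vector standing in for a real embedding model, and the knowledge base entries and function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: dict[str, str], k: int = 1) -> list[str]:
    """Return the names of the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda name: cosine(q, embed(documents[name])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: dict[str, str], k: int = 1) -> str:
    """Assemble a prompt that grounds the model in the retrieved passages."""
    context = "\n".join(f"[{name}] {documents[name]}" for name in retrieve(question, documents, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge base entries.
knowledge_base = {
    "access-policy": "Access to production systems is reviewed every quarter by the security team.",
    "retention-policy": "Customer data is deleted 90 days after account closure.",
}
question = "How often is access to production systems reviewed?"
top = retrieve(question, knowledge_base)
prompt = build_prompt(question, knowledge_base)
print(prompt)
```

The assembled `prompt` would then be sent to the generation model, so the answer draws on the organization's own documents without any change to the model's weights.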

Applications

The custom model resulting from fine-tuning the base model can then be used for various applications.

- Audits (SOC-2, ISO-27001)

  - Once the model has been fine-tuned on the existing system state, it can extract the controls that are in place and compare them to the controls required by standard audit frameworks (such as SOC-2 or ISO-27001).

  - As an output of this process, the model produces a list of audit-ready controls and a list of control gaps, which together indicate the organization's audit readiness.

  - The model can apply this process uniformly by rationalizing the requirements across multiple frameworks.

- Assessments (DPIA)

  - Similarly, a model that has been fine-tuned on the existing system state can extract processing activities, including those that pose high risk. It can further analyze each risk based on the likelihood of harm under the applicable legal standards (such as GDPR and CCPA) and on the mitigating controls that are in place.

  - As an output of this process, the model produces a risk assessment that can be used to inform product decisions as well as meet compliance requirements.

  - The model can apply this process uniformly by rationalizing the requirements across multiple state and/or regional laws.

- Documentation (Training, Reporting)

  - A model that has been fine-tuned on the existing system state can extract the obligations that apply to employees in specific roles, and can be used to automatically create employee training material.

  - Similarly, a model that has been fine-tuned on the existing system state and used to produce a risk assessment can extract the risks specific to the organization, and can be used to automatically create compliance reports for the board or auditors.

  - Further, such a model can extract compliance obligations to third parties (such as breach notification) and can be used to automatically create breach response plans outlining such obligations.
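
The audit comparison described above, matching extracted controls against framework requirements to produce ready and gap lists, can be sketched as follows. Everything here is hypothetical: the control names, the `CONTROL_MAP` structure, and the requirement IDs, which are placeholders rather than real SOC-2 or ISO-27001 clause numbers.

```python
# Hypothetical mapping from internal control names to the framework
# requirements they satisfy. One control can cover several frameworks,
# which is what lets the same analysis run across all of them.
CONTROL_MAP = {
    "quarterly-access-review": {"SOC-2": ["REQ-A"], "ISO-27001": ["REQ-X"]},
    "encryption-at-rest": {"SOC-2": ["REQ-B"], "ISO-27001": ["REQ-Y"]},
    "incident-response-plan": {"SOC-2": ["REQ-C"], "ISO-27001": ["REQ-Z"]},
}


def required_by(framework: str) -> dict[str, str]:
    """Map each requirement ID in the framework to the control that covers it."""
    return {
        req: control
        for control, frameworks in CONTROL_MAP.items()
        for req in frameworks.get(framework, [])
    }


def readiness(framework: str, controls_in_place: set[str]) -> tuple[list[str], list[str]]:
    """Split the framework's requirements into audit-ready and gap lists."""
    ready, gaps = [], []
    for req, control in sorted(required_by(framework).items()):
        (ready if control in controls_in_place else gaps).append(req)
    return ready, gaps


# Controls the model is assumed to have extracted from the organization's documents.
extracted = {"quarterly-access-review", "encryption-at-rest"}
for framework in ("SOC-2", "ISO-27001"):
    ready, gaps = readiness(framework, extracted)
    print(f"{framework}: ready={ready} gaps={gaps}")
```

In this sketch the missing incident response plan shows up as a gap in both frameworks, which illustrates how a single shared control map supports a uniform analysis across them.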

Conclusion

The compliance co-pilot can significantly reduce the burden for organizations in managing their compliance operations. Because the model is privately hosted by the organization and fine-tuned on its own data, that data stays under the organization's control and the model is tailored to the organization's specific needs.

Connect with us

If you would like to reach out for guidance or provide other input, please do not hesitate to contact us.