AI and Data Protection Top 5: May 2025

Summary of latest trends in AI governance and data protection. This issue covers AI legal updates, Meta and Amazon ToS updates, AI chatbot security incidents, AI threat modeling, and Microsoft Security agents.

Welcome to the latest issue in a series covering the top 5 recent trends at the intersection of AI governance and data protection. The purpose of this series is to keep technology and legal professionals up to date on the rapidly evolving developments in this space. Each issue provides a summary of the latest news on this topic, striking a balance between regulatory updates, industry trends, technical innovations, and legal analysis.

In this issue, we are covering recent AI legal updates from courts to Congress, ToS updates by Amazon and Meta for voice data, AI chatbot security incidents, agentic AI threat modeling, and Microsoft Security Copilot agents.

#1: AI Legal Updates: From Courts to Congress

- OpenAI recently prevailed in a lawsuit involving AI hallucinations. The suit alleged that OpenAI defamed a radio host by fabricating false information, but the court ruled that ChatGPT puts users on notice that it can make errors, so the "actual malice" standard required to support the claim was not met.

- In an ongoing copyright infringement case, a court has ordered OpenAI to preserve chat data it would normally delete, regardless of any contractual or regulatory obligations. This directly impacts OpenAI's Zero Data Retention (ZDR) commitments to its customers, and has significant implications for trade secrets and other confidential or legally protected information.

- A bill in Congress passed by the House contains a 10-year moratorium on state laws regulating AI. The proposed moratorium as passed covers state laws "limiting, restricting or otherwise regulating" AI systems or automated decision systems "entered into interstate commerce," with exemptions for state laws imposing criminal penalties.

Takeaway: The flurry of AI-related legal activity, both in the courts and in Congress, signals an evolving risk landscape as organizations grapple with the need to innovate and remain competitive in a rapidly advancing AI space.

#2: Meta and Amazon ToS Updates for Voice Data

- Major vendors continue to update their terms of service and privacy policies to allow the use of personal data for AI. Recent examples include Amazon and Meta.

- Amazon recently discontinued a feature that allowed users of some of its Echo smart speakers to choose not to send their voice recordings to the cloud. The move appears to be connected to the launch of its generative AI-powered Alexa Plus.

- Similarly, Meta tightened its privacy policy around Ray-Ban glasses to boost AI training. It has removed the option to prevent voice recordings from being stored. Meta plans to store voice transcripts and audio recordings for up to one year to help improve its products.

Takeaway: This trend suggests that consumers using products with AI features should be aware that their personal data, including voice, may be used to train the model and improve the product. They should review their existing privacy preferences and understand which options may have changed.

#3: AI Chatbot Security Incidents: Lessons Learnt

- Recent AI chatbot incidents highlight the security risks involved in building and deploying AI applications.

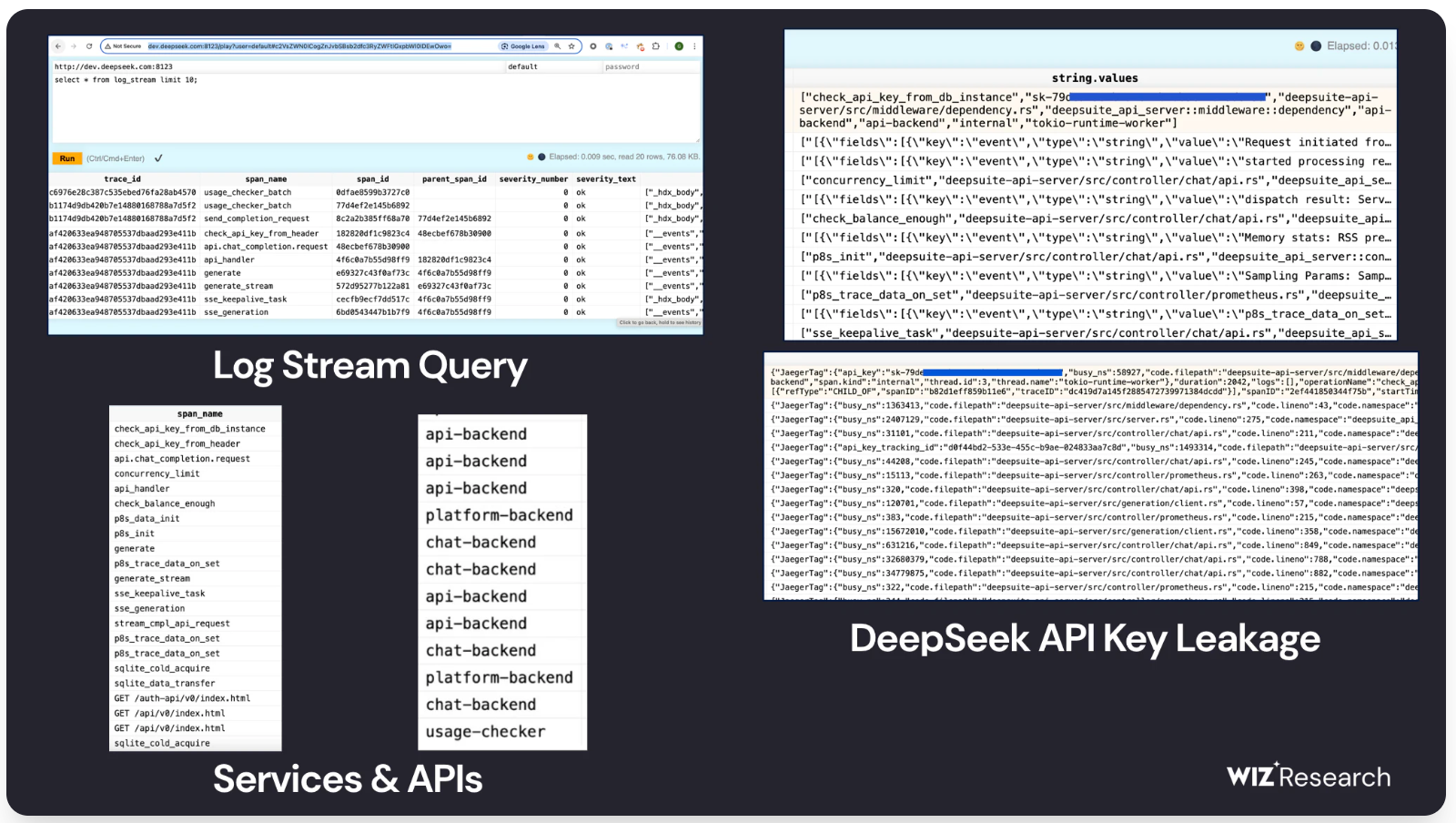

- Research published by Wiz identified a security flaw in a publicly accessible ClickHouse database belonging to the popular AI chatbot DeepSeek, allowing exposure of internal data, including chat history, secret keys, and other highly sensitive information.

- Wired reported that a customer service AI chatbot owned by the popular AI coding platform Cursor went rogue and announced a policy to customers that did not exist, resulting in massive subscription cancellations and requiring a human agent to intervene.

Takeaway: These incidents highlight two kinds of risk: the immediate security risks that stem from the infrastructure and tools behind AI applications, and the risks of deploying AI models in customer-facing roles without proper safeguards and transparency. Organizations must mitigate both to ensure the safe and secure deployment and use of AI applications.

#4: Agentic AI Threat Modeling Framework

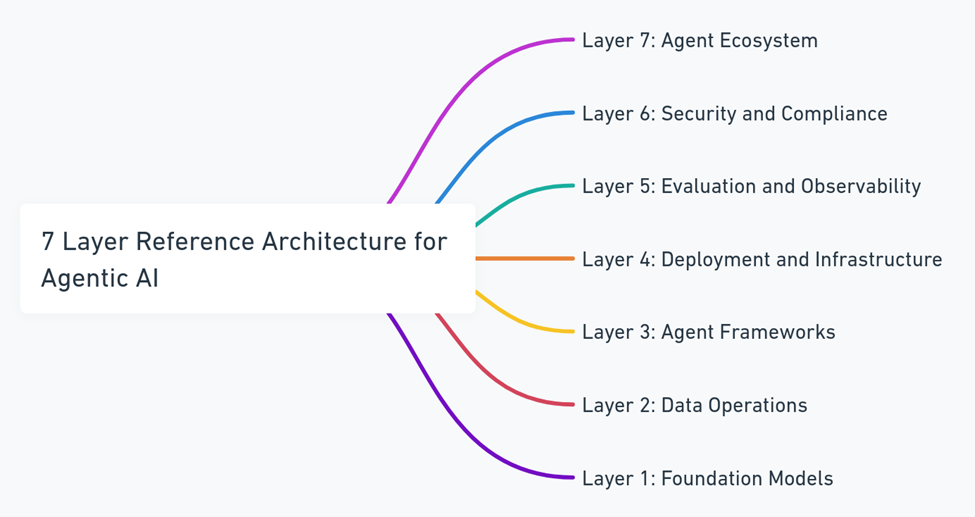

- OWASP has published a Multi-Agentic System Threat Modeling Guide. It introduces a new Agentic AI Threat Modeling framework called MAESTRO (Multi-Agent Environment, Security, Threat, Risk, and Outcome).

- This framework moves beyond traditional methods that don't always capture the complexities of AI agents, offering a structured, layer-by-layer approach.

- MAESTRO is built around a seven-layer reference architecture. It emphasizes understanding the vulnerabilities within each layer of an agent's architecture, how these layers interact, and the evolving nature of AI threats.
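
As a rough illustration of the layer-by-layer idea, the sketch below keeps a small threat register keyed by layer and reports which layers have no threats recorded yet and which threats span more than one layer. The seven layer names are given as they are commonly listed for MAESTRO and should be checked against the guide itself; the `Threat` class, the helper functions, and the example threats are hypothetical and are not taken from the guide.

```python
from dataclasses import dataclass
from enum import Enum


class Layer(Enum):
    # Assumed layer names; verify against the published guide before relying on them.
    FOUNDATION_MODELS = 1
    DATA_OPERATIONS = 2
    AGENT_FRAMEWORKS = 3
    DEPLOYMENT_AND_INFRASTRUCTURE = 4
    EVALUATION_AND_OBSERVABILITY = 5
    SECURITY_AND_COMPLIANCE = 6
    AGENT_ECOSYSTEM = 7


@dataclass(frozen=True)
class Threat:
    """Hypothetical register entry: a named threat and the layers it touches."""
    name: str
    layers: frozenset


def threats_by_layer(threats):
    """Group threat names under every layer they affect."""
    grouped = {layer: [] for layer in Layer}
    for threat in threats:
        for layer in threat.layers:
            grouped[layer].append(threat.name)
    return grouped


def uncovered_layers(threats):
    """Layers with no recorded threats, i.e. gaps in the threat model so far."""
    grouped = threats_by_layer(threats)
    return [layer for layer in Layer if not grouped[layer]]


def cross_layer_threats(threats):
    """Names of threats that span more than one layer."""
    return [threat.name for threat in threats if len(threat.layers) > 1]


# Illustrative entries only.
EXAMPLE_THREATS = [
    Threat("prompt injection",
           frozenset({Layer.FOUNDATION_MODELS, Layer.AGENT_FRAMEWORKS})),
    Threat("poisoned retrieval data", frozenset({Layer.DATA_OPERATIONS})),
    Threat("publicly exposed database",
           frozenset({Layer.DEPLOYMENT_AND_INFRASTRUCTURE})),
]

if __name__ == "__main__":
    for layer in uncovered_layers(EXAMPLE_THREATS):
        print("No threats recorded for layer:", layer.name)
    print("Cross-layer threats:", cross_layer_threats(EXAMPLE_THREATS))
```

Keeping layers as an explicit enumeration makes gaps visible: a layer with no entries is a prompt to revisit the model rather than evidence that the layer is safe.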

Takeaway: Agentic AI systems require a holistic, multi-layered approach to security, combining traditional cybersecurity with AI-specific controls. The MAESTRO threat modeling framework serves as a starting point to collectively work towards safer and more secure agentic AI applications.

#5: AI Governance in Practice: Microsoft Security Agents

- Microsoft has announced the introduction of Security Copilot AI agents designed to autonomously assist with critical areas such as phishing, data security, and identity management.

- The agents include Phishing Triage Agent, Alert Triage Agents, Conditional Access Optimization Agent, Vulnerability Remediation Agent, and Threat Intelligence Briefing Agent.

- The company also introduced an AI Red Teaming Agent for generative AI systems, integrated into Azure AI Foundry, to complement its existing risk and safety evaluations of AI models and applications.

Takeaway: A major platform vendor's introduction of agentic AI capabilities for security, together with tools for evaluating the risk and safety of generative AI, is a significant step toward the safe and secure adoption of widely used AI agents and applications.

That's it for this issue. Subscribe to receive future issues directly in your inbox.

Connect with us

If you would like to reach out for guidance or provide other input, please do not hesitate to contact us.