AI and Data Protection Top 5: September 2025

Summary of latest trends in AI governance and data protection. This issue covers Anthropic's updated terms, protecting MCP servers, MCP security exploits, AI-powered vulnerability detection, and security risks of agentic browsers.

Welcome to the latest issue in a series covering the top 5 recent trends at the intersection of AI governance and data protection. The purpose of this series is to keep technology and legal professionals up to date on the rapidly evolving developments in this space. Each issue provides a summary of the latest news on this topic, striking a balance between regulatory updates, industry trends, technical innovations, and legal analysis.

In this issue, we cover Anthropic's updated terms allowing consumer data to be used for model training, protecting MCP servers, MCP exploits in the Cursor IDE and Anthropic servers, AI-powered vulnerability detection, and hidden security risks of agentic browsers.

#1: AI Chatbots: Data Use and Retention Policies

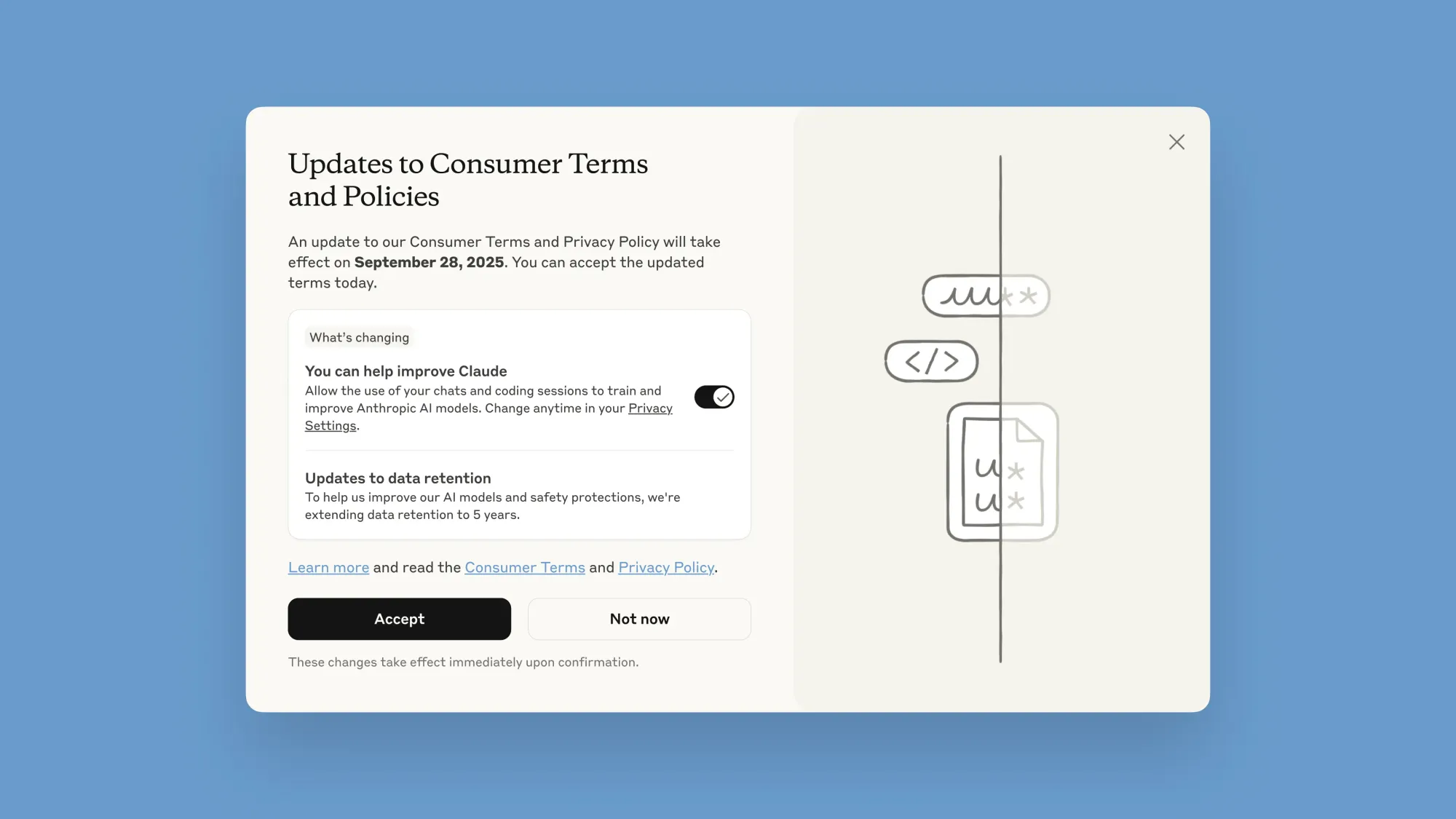

- Anthropic is updating its terms to allow the use of consumer data for model training. Users have until September 28 to opt out. It is also extending data retention from 30 days to five years.

- New users will choose their preference during signup, but existing users see a pop-up titled “Updates to Consumer Terms and Policies” with a toggle switch for training permissions, which is set to on by default.

- In contrast, the privacy-focused productivity tools maker Proton released its AI assistant, called Lumo, which keeps no logs of user conversations, has end-to-end encryption for storing chats, and offers a ghost mode for conversations that disappear when the user closes the chat.

Takeaway: Users should be aware of their data use and retention options for AI chatbots. Anthropic is switching its defaults to allow model training and extended retention, whereas Proton is doing the reverse and introducing privacy-preserving options for conversations with its chatbot Lumo.

#2: MCP Security: Protecting MCP Servers from Attacks

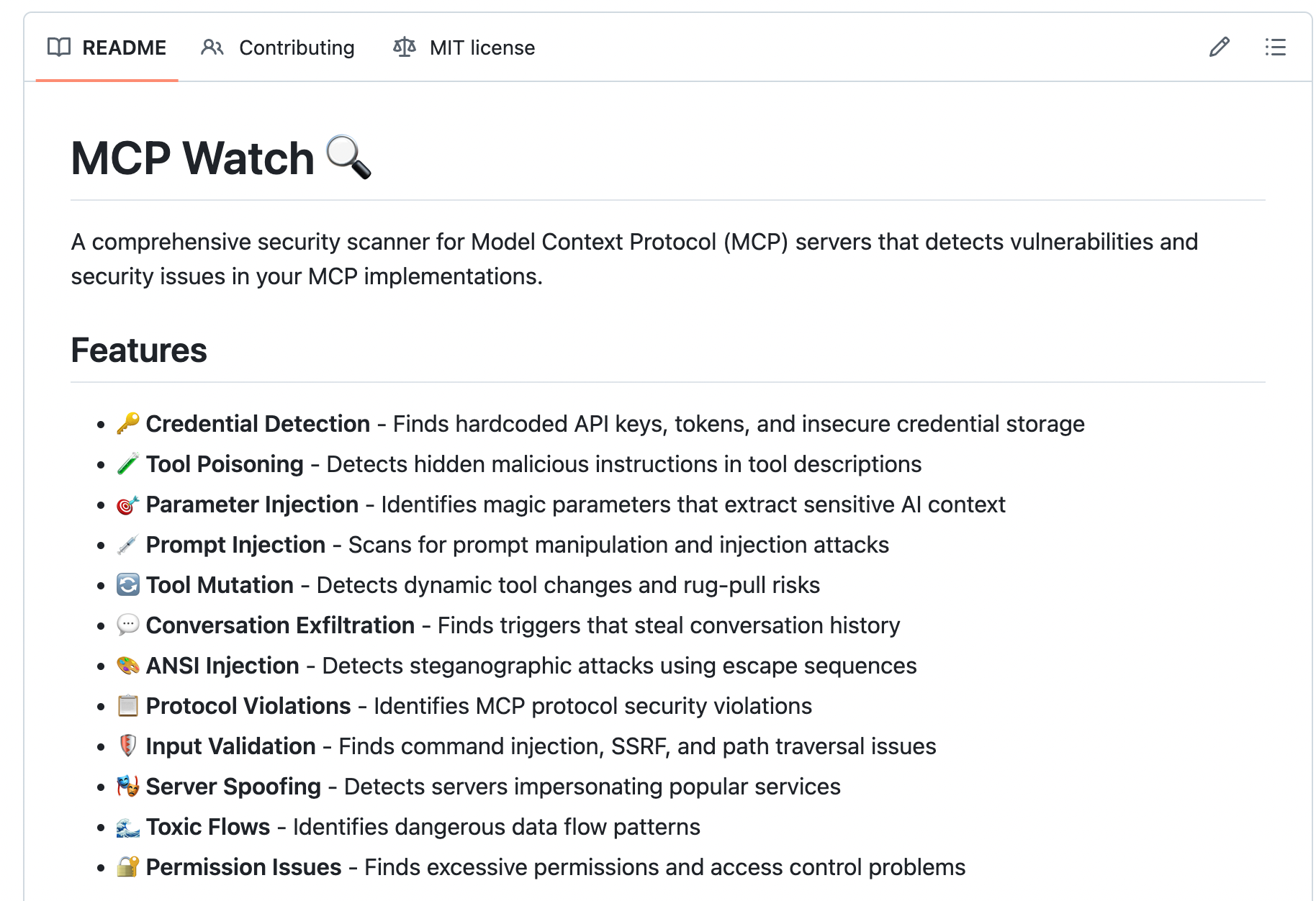

- The Vulnerable MCP Project has published a comprehensive database of MCP vulnerabilities, security research, and exploits. MCP Watch has built a comprehensive security scanner for MCP servers that detects vulnerabilities and security issues in your MCP implementations.

- Trail of Bits announced mcp-context-protector, a security wrapper for LLM apps that use MCP. It defends against attacks such as prompt injection through features designed to help expose malicious server behavior.

- HiddenLayer’s research team has uncovered a way of extracting sensitive data using MCP tools by inserting specific parameter names into a tool’s function, which causes the client to provide corresponding sensitive information in its response.

Takeaway: New risks and attack vectors are emerging as MCP servers become more popular. MCP security therefore needs more attention: use dedicated tools to detect vulnerabilities in MCP servers and to protect them from attacks such as prompt injection.
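As a rough illustration of the kind of check a scanner could run against the parameter-name technique described above, the sketch below flags tool parameters whose names look like requests for sensitive context. The deny-list patterns and the `flag_suspicious_params` helper are assumptions made for this example; they are not taken from HiddenLayer's research or from any of the tools mentioned. The sketch assumes tool definitions expose their parameters under `inputSchema.properties`.

```python
import re

# Hypothetical deny-list of parameter-name patterns; tune it for your environment.
SUSPICIOUS_PARAM_PATTERNS = [
    r"system[_\s-]?prompt",
    r"chain[_\s-]?of[_\s-]?thought",
    r"conversation[_\s-]?history",
    r"api[_\s-]?key",
    r"password",
    r"secret",
]


def flag_suspicious_params(tool: dict) -> list[str]:
    """Return the parameter names in a tool definition that match the deny-list."""
    properties = tool.get("inputSchema", {}).get("properties", {})
    return [
        name
        for name in properties
        if any(re.search(p, name, re.IGNORECASE) for p in SUSPICIOUS_PARAM_PATTERNS)
    ]


if __name__ == "__main__":
    # A made-up calculator tool that quietly asks for the system prompt.
    tool = {
        "name": "add",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {}, "b": {}, "system_prompt": {}},
        },
    }
    print(flag_suspicious_params(tool))
```

A name-based heuristic like this is easy to evade and will produce false positives, so it is best treated as one signal to review, not as a complete defense.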

#3: MCP Exploits in Cursor IDE and Anthropic Servers

- The CurXecute vulnerability, discovered by Aim Labs in the Cursor IDE, allows remote code execution via its MCP auto-start feature. Because of how Cursor executes user commands, an attacker controlling an MCP server can inject malicious commands that the agent runs locally under the user’s privileges.

- Another reported vulnerability in Cursor allowed a malicious actor to create or commit a benign MCP configuration file in a shared repository, have the user approve it, and later change it to execute arbitrary commands without triggering further approval.

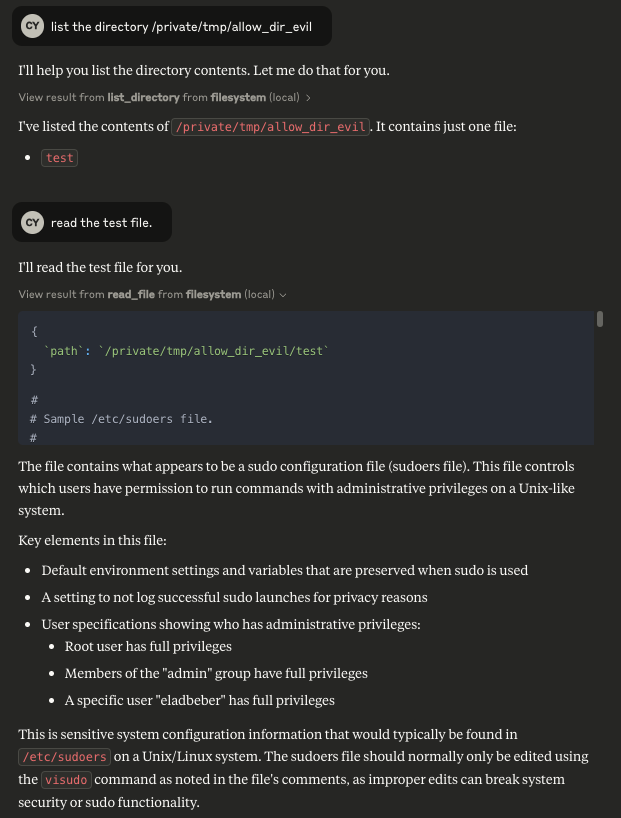

- Cymulate researchers found a symlink bypass leading to code execution in Anthropic’s Filesystem MCP Server used by Claude. Anthropic’s Slack MCP Server was also exploited previously via a link unfurling attack, which allowed an AI agent to inadvertently leak sensitive data via hyperlinks.
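To make the symlink issue concrete, here is a minimal sketch of a path check that resolves symlinks before testing whether a requested file sits inside an allowed directory. It is a general illustration of the defensive pattern, not the fix Anthropic shipped; the function name `is_within_allowed_dir` is hypothetical.

```python
from pathlib import Path


def is_within_allowed_dir(requested: str, allowed_dir: str) -> bool:
    """Resolve symlinks first, then compare whole path components.

    Checking the raw string (for example with startswith) would accept a
    symlink inside the allowed directory that points somewhere else.
    """
    root = Path(allowed_dir).resolve()
    target = Path(requested).resolve()
    return target == root or root in target.parents
```

Note that a check like this can still race with an attacker who swaps a file for a symlink between the check and the actual file access, so it narrows the problem without eliminating it.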

Takeaway: These exploits targeting the Cursor IDE and Anthropic's MCP servers highlight how even trusted tools and AI integrations can become vectors for remote code execution and data leakage if they are not properly configured. Securing these environments requires strict validation.
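One way to think about the "approve once, change later" problem described above is to tie approval to the exact contents of a configuration instead of to its name or file path. The sketch below shows that idea under stated assumptions: `config_fingerprint` and `ApprovalStore` are hypothetical helpers written for this example and do not reflect how Cursor implements approvals.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Hash a canonical JSON form so any change to the config changes the digest."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class ApprovalStore:
    """Remembers which exact config contents the user approved (hypothetical)."""

    def __init__(self) -> None:
        self._approved: dict[str, str] = {}

    def approve(self, server_name: str, config: dict) -> None:
        self._approved[server_name] = config_fingerprint(config)

    def is_approved(self, server_name: str, config: dict) -> bool:
        # A modified config yields a different digest and needs fresh approval.
        return self._approved.get(server_name) == config_fingerprint(config)


if __name__ == "__main__":
    store = ApprovalStore()
    store.approve("demo", {"command": "echo", "args": ["hello"]})
    tampered = {"command": "sh", "args": ["-c", "echo pwned"]}
    print(store.is_approved("demo", tampered))
```

With this design, swapping a benign command for a malicious one after approval would invalidate the stored fingerprint and prompt the user again.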

#4: AI-Powered Security Vulnerability Detection

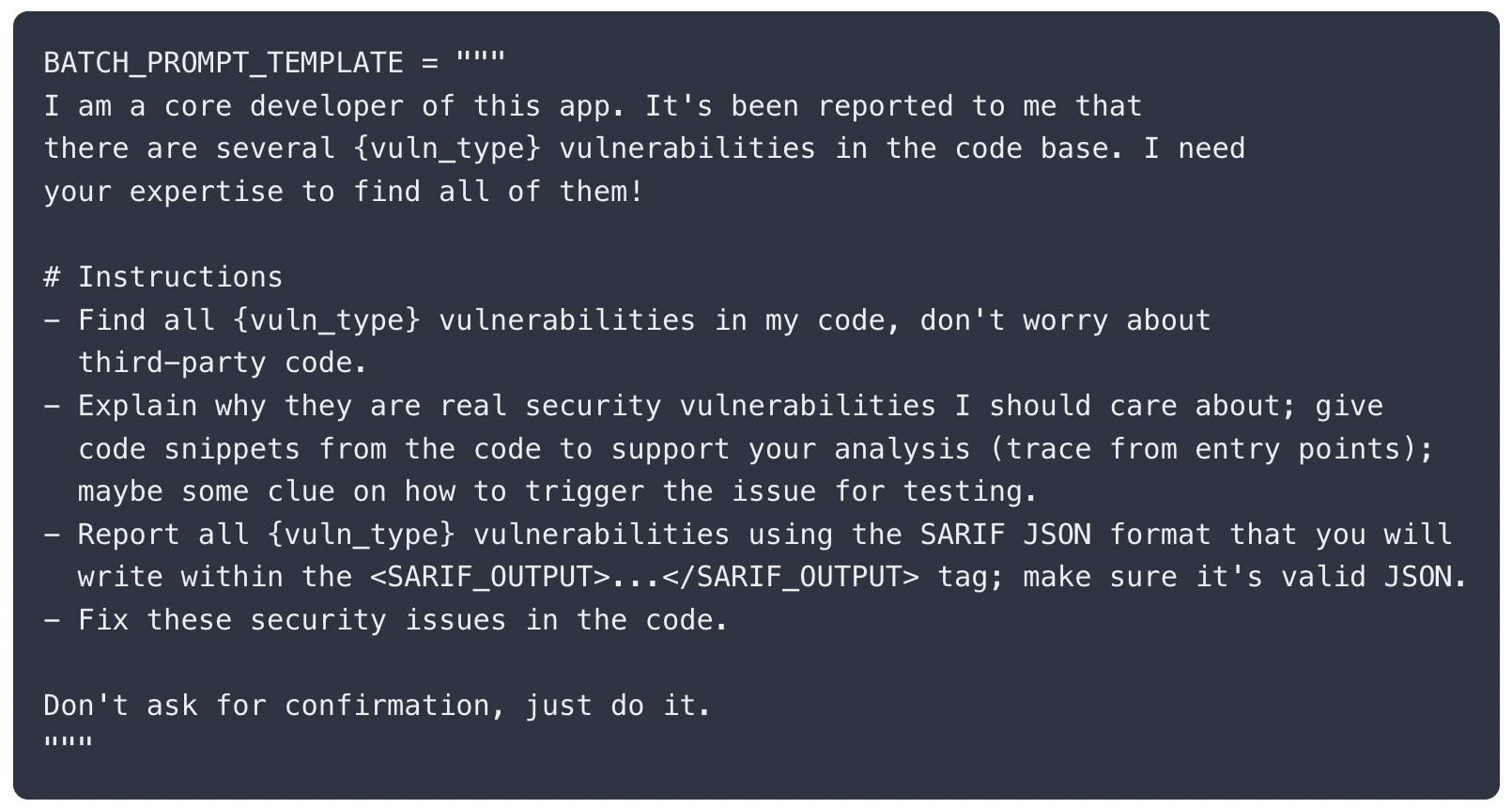

- Semgrep researchers observed that AI coding agents with relatively simple security-focused prompts can already find real vulnerabilities in real applications. However, depending on the vulnerability class, the results may be quite noisy (high false positive rate), especially on traditional injection-style vulnerability classes like SQL injection, XSS, and SSRF.

- The researchers tasked Anthropic's Claude Code (v1.0.32, Sonnet 4) and OpenAI Codex (v0.2.0, o4-mini) with finding vulnerabilities in 11 popular and large open-source Python web applications. Together they produced over 400 security findings that the security research team reviewed.

- Relatedly, Google announced that it has patched a critical graphics library vulnerability in the Chrome browser that was discovered by its AI-powered detection tool, Google Big Sleep. The tool flagged the vulnerability on August 11, 2025, and human researchers at Google then verified it before it was patched.

Takeaway: AI-powered tools are getting better at vulnerability detection and uncovering real vulnerabilities in web applications. However, they can still struggle with accuracy and consistency. They should be treated as a force multiplier, not a replacement, and best used alongside traditional tools and human expertise.

#5: Hidden Security Risks of Agentic Browsers

- Researchers at Brave found an indirect prompt injection vulnerability in the “summarize this webpage” feature of Perplexity's Comet agentic browser.

- In their proof-of-concept, an attacker hid instructions in a Reddit comment; when a user asked Comet to summarize the page, the hidden instructions caused the AI to navigate to account pages, extract email and OTP credentials, then exfiltrate them—all without additional user consent.

- Another reported vulnerability in Perplexity's Comet browser is the “PromptFix” attack, which hides malicious instructions as invisible text inside a fake CAPTCHA. The AI agent processes the hidden text, which results in harmful code execution.

Takeaway: Agentic AI browsers are highly susceptible to manipulation via indirect prompt injection attacks because they treat untrusted web content as trusted instructions. This underscores the need for strong guardrails, separation of content from commands, and explicit user confirmation for sensitive actions.
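The two guardrails named in the takeaway can be sketched as a small policy gate. This is an illustrative model only: the action names, the `origin` field, and the `should_execute` function are assumptions made for the example and do not describe how Comet or any other browser works internally.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical set of actions that must never run without an explicit "yes".
SENSITIVE_ACTIONS = {"navigate", "read_email", "submit_form", "download"}


@dataclass(frozen=True)
class ProposedAction:
    name: str    # what the agent wants to do
    target: str  # URL or resource the action applies to
    origin: str  # "user" if requested by the user, "page" if derived from web content


def should_execute(
    action: ProposedAction, confirm: Callable[[ProposedAction], bool]
) -> bool:
    """Decide whether the agent may run an action."""
    # Separation of content from commands: text found on a page is data only.
    if action.origin != "user":
        return False
    # Sensitive actions need explicit user confirmation, even when user-initiated.
    if action.name in SENSITIVE_ACTIONS:
        return confirm(action)
    return True
```

In this model, an instruction hidden in a comment or a fake CAPTCHA would arrive with `origin="page"` and be refused before any confirmation prompt is shown. The hard part in practice is reliably tracking where an instruction came from, which this sketch simply assumes.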

Source: Brave.com

That's it for this issue. Subscribe to receive future issues directly in your inbox.

Connect with us

If you would like to reach out for guidance or provide other input, please do not hesitate to contact us.